Fixing the chatbot through better architecture

As part of our AI adoption efforts, we decided to build a chatbot that could understand the data stored in any tenant's workspace. In simple terms, a tenant is a client of Lyearn, and each tenant can have multiple users and admins. A workspace is just a way to separate one tenant’s data from another. The chatbot needed to work with all types of Lyearn content, like courses, articles, and more. This chatbot would first be showcased at a major conference at Sprinklr, our largest client.

A Last-Minute Crisis

I wasn't originally part of the development team for this project. We had decided to reuse Lyearn’s existing “Community” infrastructure—kind of like Slack—to handle message storage and sharing. Everything seemed to be on track until, just two weeks before our deadline, I got a call about major bugs breaking the platform.

AI responses were randomly disappearing and reappearing. The chat window’s scroll position was unstable, making the experience frustrating. We started by fixing bugs as they came up, but the more we patched, the more new bugs appeared. Two problems stood out:

The chat window’s scroll position kept jumping unpredictably.

There was a gap between when an AI-generated message was created and when it was registered in the system, during which the message would disappear from the screen.

The initial look and feel of the chatbot

Understanding the Root Problem

At first, I tried patching things up for two days, but we weren’t making real progress. So, I spent the weekend understanding the existing system design in depth. What I found was shocking—the chat was built on a messy architecture that wasn’t designed to handle AI responses well.

Here’s how things worked:

The chat window was treated as a messaging channel (like a Slack channel).

When a user sent their first message, the system first created a new channel (createChannelAPI), then sent the message (createPostAPI).

The message appeared on the screen only after the system processed these API calls, which caused a noticeable delay.

The AI response was generated through a GraphQL subscription, which streamed the response in real-time.

Once the streaming finished, we made another createPostAPI call, marking the sender as "AI."

At this point, the AI response disappeared from the screen and then reappeared once the system refreshed the chat history 🤦♂️.
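To make the disappearing-message gap concrete, here is a minimal TypeScript model of the flow above. It is a hypothetical reconstruction, not the original code: it assumes the screen was derived from the last fetched chat history plus the in-progress stream, and the names Post, OldChatState, and visibleMessages are illustrative only.

```typescript
// Hypothetical model of the original flow: the screen shows the server-confirmed
// history plus whatever is currently streaming. Names are illustrative only.
interface Post {
  id: string;
  sender: "user" | "AI";
  text: string;
}

interface OldChatState {
  serverHistory: Post[];        // what the chat-history query last returned
  streamingText: string | null; // partial AI response from the subscription
}

// What the user sees is derived entirely from these two sources.
function visibleMessages(state: OldChatState): string[] {
  const shown = state.serverHistory.map((p) => `${p.sender}: ${p.text}`);
  if (state.streamingText !== null) {
    shown.push(`AI: ${state.streamingText}`);
  }
  return shown;
}

const baseHistory: Post[] = [{ id: "p1", sender: "user", text: "What is onboarding?" }];

// 1. While streaming, the partial response is visible.
const streaming: OldChatState = { serverHistory: baseHistory, streamingText: "Onboarding is..." };

// 2. Streaming ends and the buffer is cleared, but the saved AI post is not in
//    the history yet: the AI message vanishes from the screen.
const gap: OldChatState = { serverHistory: baseHistory, streamingText: null };

// 3. The history refetch returns the saved AI post: the message reappears.
const refreshed: OldChatState = {
  serverHistory: [...baseHistory, { id: "p2", sender: "AI", text: "Onboarding is..." }],
  streamingText: null,
};

console.log(visibleMessages(streaming).length); // 2
console.log(visibleMessages(gap).length);       // 1
console.log(visibleMessages(refreshed).length); // 2
```

The point of the model is that nothing on screen is owned by the frontend: any moment where the stream has ended but the history has not caught up shows up directly as a missing message.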

This setup was built to reuse existing infrastructure, but it wasn’t designed for a smooth chatbot experience.

Proposing a New Architecture

I realized that if we restructured the frontend properly, most of these bugs would disappear on their own. I brought up the idea in a team meeting, and people were (surprisingly) open to it, despite it being additional, unplanned work. It seemed they had hesitated to bring it up themselves because so much effort had already gone into the current setup. Once I suggested a new approach, others voiced their support, and I took the lead on re-architecting the frontend.

The New Solution

Before making it an AI chatbot, we needed to first build a solid chat application. Here’s what we changed:

A new ChatView component: This component would treat the application as a messaging app first and foremost, with all functional logic and integrations extracted out of it. It received a messageList array to display, and each message was simplified to the minimum required data.

Message List as the Source of Truth: Instead of relying on API calls to determine what appears on the screen, we created a messageList array in the frontend as the single source of truth. This ensured messages didn’t randomly disappear.

Immediate Message Display:

When a user sent a message, it was instantly added to messageList and displayed.

The backend API call still happened, but the message wouldn’t be removed while waiting for confirmation.

We generated a unique ID in the frontend (using the bson package) and sent it to the backend, so when the backend registered the message, we could match it with our existing list instead of replacing everything.

Fixing AI Response Handling:

AI responses were still streamed in real-time.

Once the full response was generated, we pushed it directly into messageList.

Then, we made the createPost API call.

When the backend later returned the same AI message, we used the ID to match and avoid replacing it unnecessarily.
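The new flow can be sketched in TypeScript as below. This is a minimal illustration under stated assumptions, not the production ChatView code: the ChatMessage shape, the confirmed flag, and the helpers appendLocal and reconcile are hypothetical, and Node's randomUUID stands in for the bson package's ObjectId (for example new ObjectId().toHexString()) to keep the example dependency-free.

```typescript
import { randomUUID } from "node:crypto";

// Minimal message shape handed to a ChatView-style component (illustrative).
interface ChatMessage {
  id: string;         // generated on the client and sent to the backend
  sender: "user" | "AI";
  text: string;
  confirmed: boolean; // true once the backend has registered the post
}

// What the backend returns for a post (assumed to echo the client id).
type ServerPost = Omit<ChatMessage, "confirmed">;

// Stand-in for the bson package's ObjectId; any unique client-side id works here.
function createClientId(): string {
  return randomUUID();
}

// Append a message immediately; it stays visible while createPost is in flight.
function appendLocal(list: ChatMessage[], sender: ChatMessage["sender"], text: string): ChatMessage[] {
  return [...list, { id: createClientId(), sender, text, confirmed: false }];
}

// Merge backend posts into the local list by id: known ids are updated in
// place (keeping their position), unknown ids are appended at the end.
function reconcile(list: ChatMessage[], fromServer: ServerPost[]): ChatMessage[] {
  const serverById = new Map(fromServer.map((p): [string, ServerPost] => [p.id, p]));
  const known = new Set(list.map((m) => m.id));
  const merged = list.map((m) => {
    const match = serverById.get(m.id);
    return match ? { ...m, ...match, confirmed: true } : m;
  });
  const unseen = fromServer
    .filter((p) => !known.has(p.id))
    .map((p) => ({ ...p, confirmed: true }));
  return [...merged, ...unseen];
}

// 1. The user sends a message: it is shown at once, then createPost is called with its id.
let messageList: ChatMessage[] = appendLocal([], "user", "Summarize the onboarding course");
const userId = messageList[0].id;

// 2. Once the streamed AI response is complete, it is pushed straight into the
//    list before the createPost call for the "AI" sender.
messageList = appendLocal(messageList, "AI", "Here is a summary...");
const aiId = messageList[1].id;

// 3. The backend later returns both posts with the same ids: nothing is
//    replaced or reordered, the entries are only marked as confirmed.
messageList = reconcile(messageList, [
  { id: userId, sender: "user", text: "Summarize the onboarding course" },
  { id: aiId, sender: "AI", text: "Here is a summary..." },
]);

console.log(messageList.length);                    // 2
console.log(messageList.every((m) => m.confirmed)); // true
```

Because every entry carries an id chosen on the client, the backend's copy of a message can only update an existing entry in messageList, never remove it, which is what removes the window where a message could vanish.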

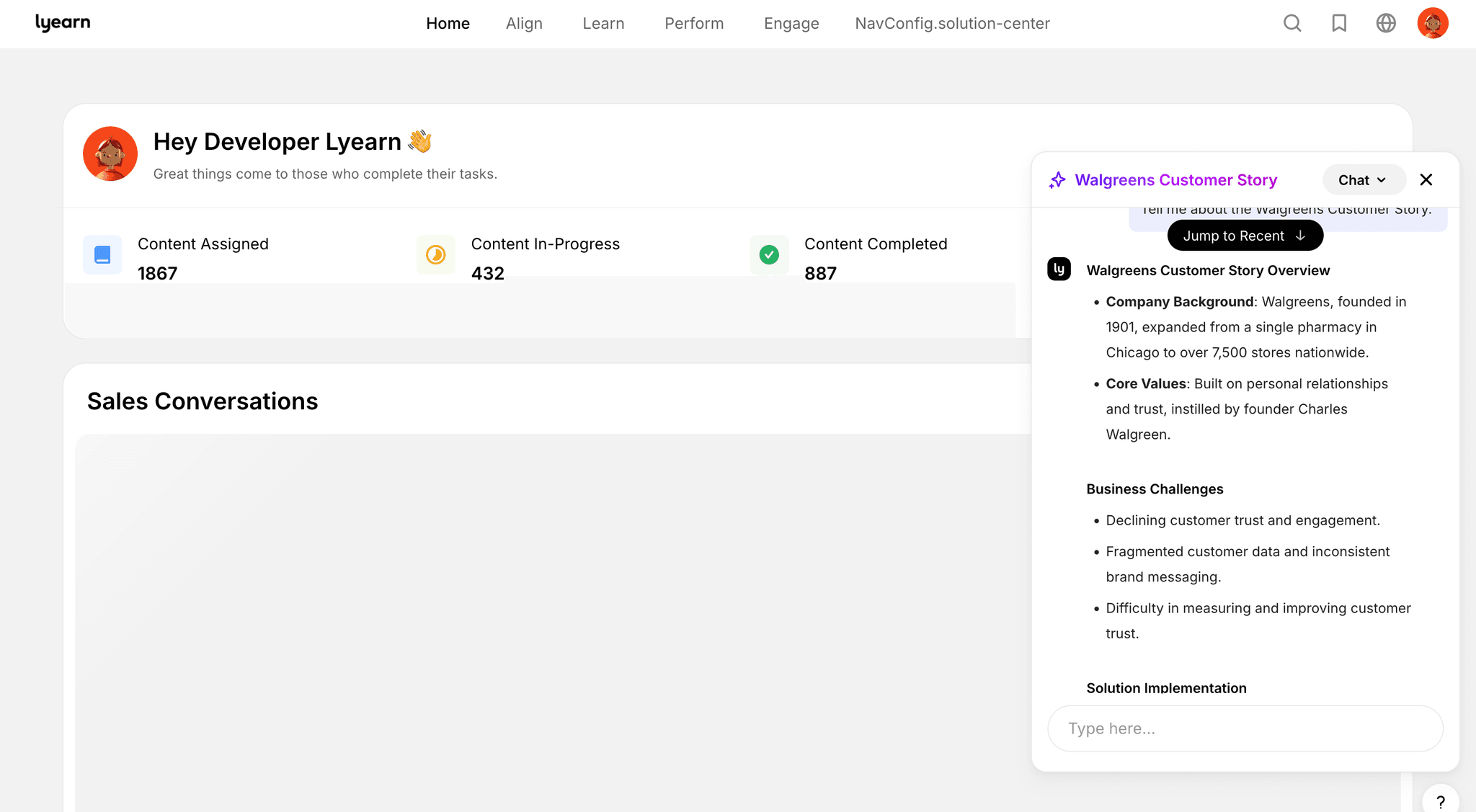

Chatbot with fixed UX. We also made it larger.

This approach made the frontend much more stable, faster, and predictable.

The Results

With this fix, most of our major bugs vanished instantly. We could now confidently scale the chatbot, and adding new features became much easier. Later, we introduced:

Regenerating responses (asking AI to try again).

A full-screen chat experience.

Note-taking within the chat window.

A "Save to Notes" button for useful messages.

Displaying information sources for AI responses.

Pinning important messages.

Within two months, this chatbot became part of a much larger corporate knowledge management system. Our first client, Sprinklr, gave exceptional feedback, and soon, we began pitching and rolling it out to other Lyearn clients. This chatbot became a major focus area for the entire team and one of Lyearn’s most successful AI-driven features.

The current chatbot application in all its glory

Watch the demo at Sprinklr here (chatbot first shown at 3:00):